Framework for Estimating 3D Scene Flow from Stereo Images

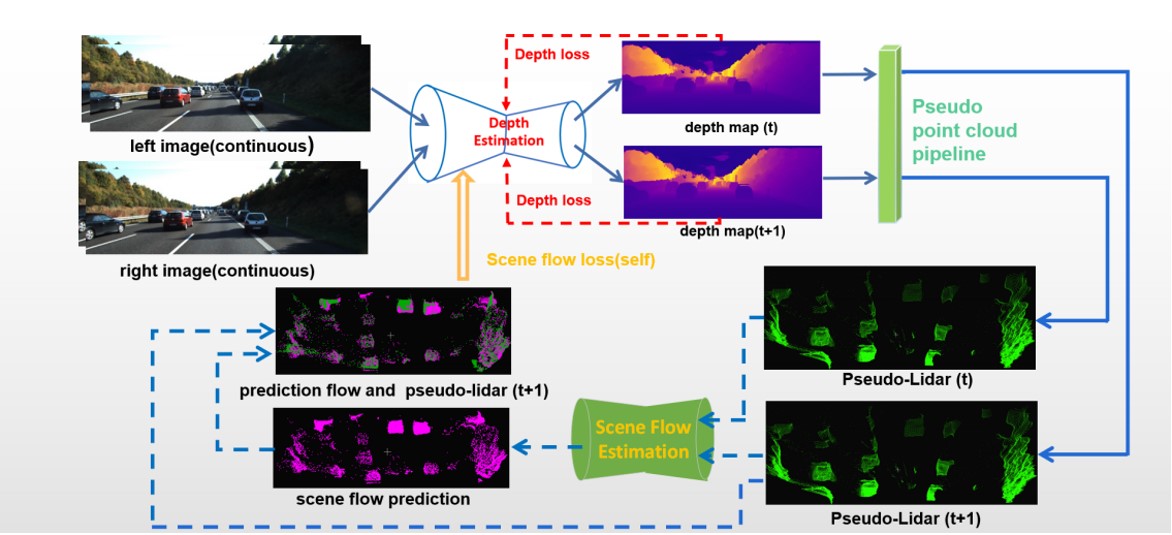

2020-11-01 ~ 2022-07-01

3D scene flow describes how each point in a scene moves from the current time step to the next in 3D Euclidean space. Estimating it makes it possible to infer the non-rigid motion of all objects in the scene. Current state-of-the-art approaches to 3D dynamic scene perception depend heavily on sensors such as LiDAR. The color camera used in the KITTI dataset costs no more than $800, while the Velodyne HDL-64E LiDAR sensor costs about $75,000. Equipping autonomous vehicles or robots with LiDAR therefore raises their cost considerably. A stereo camera system can serve as a low-cost backup for LiDAR-based scene flow estimation. My responsibilities on the project were as follows:

- Proposed a new self-supervised method for learning 3D scene flow from stereo images. This project is the first to introduce stereo image-based pseudo-LiDAR to 3D scene flow estimation;

- Generated pseudo-LiDAR point clouds using an existing depth estimation algorithm, and proposed a neighborhood point statistics method to filter noisy points out of the pseudo-LiDAR point cloud;

- Wrote all of the code for the proposed unsupervised learning algorithm for 3D scene flow;

- Wrote the entire paper.
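The pseudo-LiDAR step and the noise filtering mentioned above can be illustrated with a minimal sketch. It is not the project's code: the function names, the pinhole intrinsics `fx`, `fy`, `cx`, `cy`, the `max_depth` cutoff, and the parameters `k` and `std_ratio` are all assumptions made for illustration. The filter shown is generic statistical outlier removal based on mean nearest-neighbor distance, used here as a stand-in for the neighborhood point statistics method, whose exact form is not given in this description.

```python
import numpy as np


def depth_to_pseudo_lidar(depth, fx, fy, cx, cy, max_depth=80.0):
    """Back-project an (H, W) depth map into an (N, 3) point cloud.

    Assumes a pinhole camera; depth is in meters along the optical axis.
    Pixels with non-positive depth or depth >= max_depth are dropped.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column / row grids
    valid = (depth > 0) & (depth < max_depth)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


def filter_by_neighbor_statistics(points, k=8, std_ratio=2.0):
    """Generic statistical outlier removal (illustrative stand-in).

    A point is kept if its mean distance to its k nearest neighbors is at
    most (global mean + std_ratio * global std) of that quantity.
    Uses a dense O(N^2) distance matrix, so it only suits small clouds.
    """
    diff = points[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    # After sorting each row, column 0 is the zero distance to the point itself.
    knn = np.sort(dist, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]
```

For full-resolution KITTI frames, the dense distance matrix would need to be replaced by a spatial index such as a k-d tree; the dense version is kept here only to keep the sketch short.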

This project explores low-cost 3D dynamic scene perception. On the public KITTI dataset, the proposed method estimates the motion vectors of 3D points in the scene from binocular images only.
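To make the idea of learning motion vectors without ground-truth labels concrete, the sketch below shows one widely used self-supervised signal for point-cloud scene flow: warp the first point cloud by the predicted flow and measure its Chamfer distance to the second point cloud. This is an assumption chosen for illustration; the loss actually used in the project is not specified here, and the function names are hypothetical.

```python
import numpy as np


def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets a (N, 3) and b (M, 3).

    Uses squared Euclidean distances and a dense (N, M) matrix.
    """
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=2)
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()


def self_supervised_flow_loss(points_t, flow, points_t1):
    """Illustrative label-free loss: warp frame t by the flow, compare to frame t+1."""
    warped = points_t + flow  # predicted positions at the next time step
    return chamfer_distance(warped, points_t1)
```

With this kind of objective, a flow prediction that moves every point onto the next frame's cloud gives a loss of zero, while a zero-flow prediction is penalized whenever the scene has moved.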