Self-supervised Learning for Dynamic Scene Perception in Robots

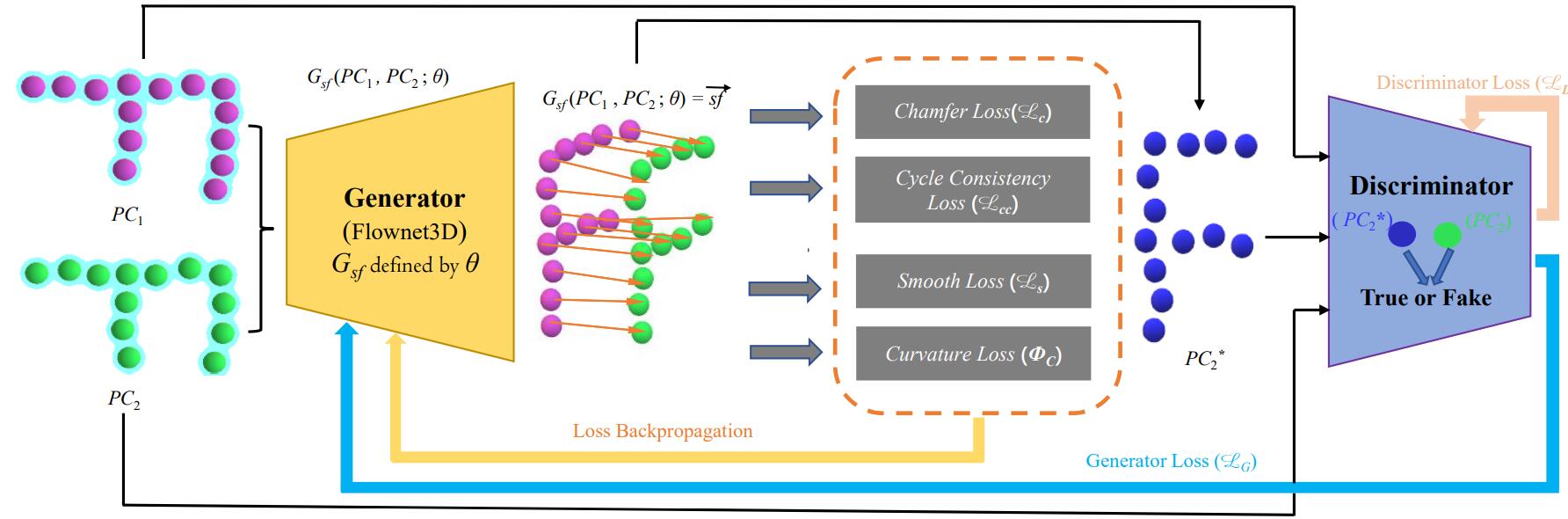

Overview of the proposed framework


2020-12-01 ~ 2021-12-30

This project focuses on alleviating the high cost and poor accuracy of dynamic scene perception in robots. To this end, a new self-supervised scene flow network is designed. 3D scene flow represents the 3D motion of each point in 3D space, and it can provide basic 3D motion perception for autonomous driving and service robots. The main work is as follows:

- A novel framework for self-supervised learning of 3D scene flow is proposed, which introduces the idea of generative adversarial learning into scene flow estimation. The adversarial training between the scene flow generator and the point cloud discriminator has been implemented in code. Through this training, the point clouds produced by the generator become increasingly similar to real point clouds, which in turn makes the scene flow estimates increasingly accurate.

- Four different types of point cloud discriminators are designed, and the best-performing discriminator structure is identified through extensive experiments.

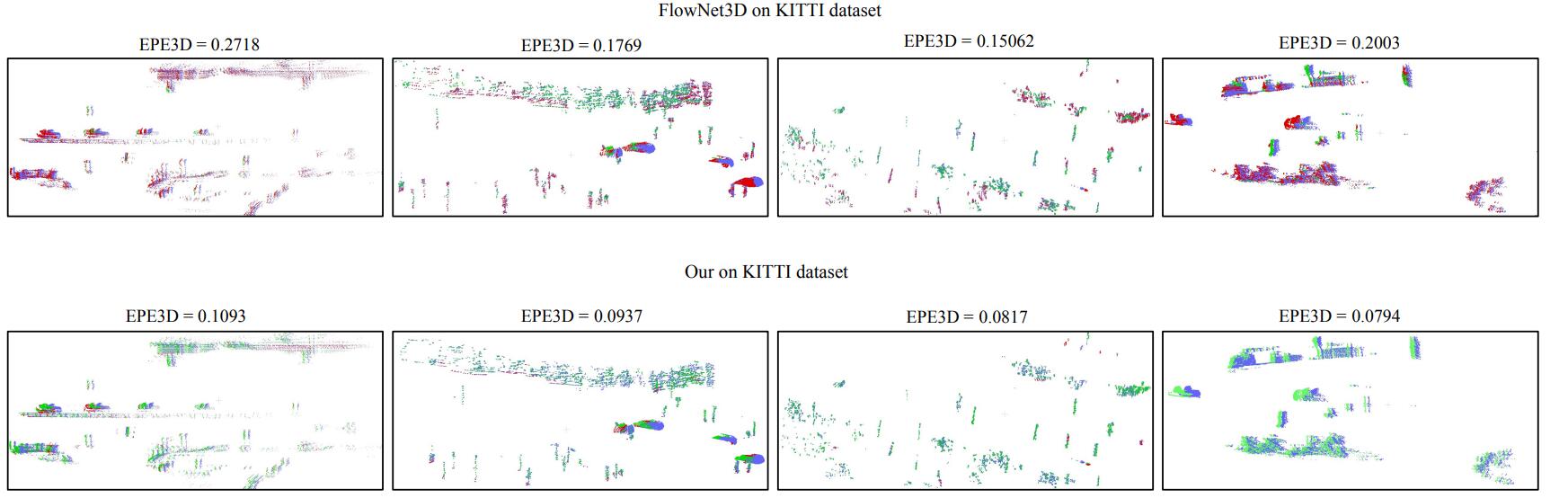

- I was mainly responsible for the experimental part of the scene flow estimation framework. Experimental results on the public KITTI dataset show that introducing adversarial learning into scene flow estimation effectively improves its performance.
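The adversarial idea described above can be illustrated with a minimal sketch. It assumes that the "generated" point cloud is the first frame moved by the estimated scene flow (`p1 + flow`) and that the "real" point cloud is the observed second frame. The discriminator here is a hypothetical PointNet-style toy with fixed random weights, not one of the four discriminators designed in this project, and the losses are the standard non-saturating GAN losses, chosen as an assumption. All function and class names are illustrative.

```python
import numpy as np


def warp_with_flow(p1: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Apply a per-point 3D motion vector (scene flow) to every point of frame 1."""
    assert p1.shape == flow.shape and p1.shape[1] == 3
    return p1 + flow


def log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)) = -log(1 + exp(-x)).
    return float(-np.logaddexp(0.0, -x))


class ToyPointDiscriminator:
    """Hypothetical PointNet-style discriminator with fixed random weights.

    A shared per-point layer is followed by max-pooling, so the score does not
    depend on the order of the points; a linear head outputs a "real" logit.
    """

    def __init__(self, hidden: int = 32, seed: int = 0) -> None:
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(scale=0.5, size=(3, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(scale=0.5, size=hidden)
        self.b2 = 0.0

    def logit(self, points: np.ndarray) -> float:
        h = np.maximum(points @ self.w1 + self.b1, 0.0)  # (N, hidden), ReLU per point
        g = h.max(axis=0)                                 # order-invariant global feature
        return float(g @ self.w2 + self.b2)


def discriminator_loss(d: ToyPointDiscriminator, real_points: np.ndarray,
                       fake_points: np.ndarray) -> float:
    # D is trained to score the observed frame 2 as real and the warped frame 1 as fake.
    return -(log_sigmoid(d.logit(real_points)) + log_sigmoid(-d.logit(fake_points)))


def generator_loss(d: ToyPointDiscriminator, fake_points: np.ndarray) -> float:
    # Non-saturating loss: the flow estimator is rewarded when D scores its warped cloud as real.
    return -log_sigmoid(d.logit(fake_points))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    p1 = rng.uniform(-1.0, 1.0, size=(256, 3))
    p2 = p1 + np.array([0.3, 0.0, 0.05])          # toy "real" next frame: a rigid translation
    flow = rng.normal(scale=0.1, size=p1.shape)   # stand-in for the generator's output
    fake = warp_with_flow(p1, flow)
    d = ToyPointDiscriminator()
    print("D loss:", discriminator_loss(d, p2, fake))
    print("G loss:", generator_loss(d, fake))
```

This sketch only evaluates the two losses. An actual training loop would compute gradients with an autodiff framework and alternate updates between the discriminator and the scene flow network; max-pooling is used so that the discriminator's score is invariant to point order, which is a common design choice for point cloud networks.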

Visualization of 3D scene flow estimation accuracy on the public KITTI dataset. Correctly estimated points are shown in green and incorrectly estimated points in red. These results suggest that the project has made initial progress toward improving the accuracy of 3D scene perception.
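The criterion for marking a point as correct (green) or incorrect (red) is not stated above. The sketch below assumes the convention that is common in point-cloud scene flow evaluation on KITTI: a point counts as correct when its end-point error is below 0.05 m or below 5% of the true motion magnitude (often reported as Acc3DS). The looser and outlier thresholds are likewise assumptions, and the function names are hypothetical.

```python
import numpy as np


def scene_flow_metrics(pred: np.ndarray, gt: np.ndarray, eps: float = 1e-8) -> dict:
    """Per-point scene flow metrics; pred and gt are (N, 3) flow vectors in metres.

    The thresholds follow a commonly used convention and are assumptions here.
    """
    err = np.linalg.norm(pred - gt, axis=1)          # end-point error per point (m)
    rel = err / (np.linalg.norm(gt, axis=1) + eps)   # error relative to true motion
    correct = (err < 0.05) | (rel < 0.05)             # strict accuracy criterion
    return {
        "EPE3D": float(err.mean()),
        "Acc3DS": float(correct.mean()),
        "Acc3DR": float(((err < 0.1) | (rel < 0.1)).mean()),
        "Outliers3D": float(((err > 0.3) | (rel > 0.1)).mean()),
        "correct_mask": correct,
    }


def correctness_colors(correct: np.ndarray) -> np.ndarray:
    # RGB per point: green for correct estimates, red for incorrect ones.
    return np.where(correct[:, None], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0])


if __name__ == "__main__":
    gt = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
    pred = np.array([[1.01, 0.0, 0.0], [0.0, 1.0, 0.0]])
    m = scene_flow_metrics(pred, gt)
    print(m["EPE3D"], m["Acc3DS"], correctness_colors(m["correct_mask"]))
```

In this toy example the first point is off by 1 cm and counts as correct, while the second is off by 1 m and counts as incorrect, so half of the points would be drawn in green.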